Will predictions of advancements in medicine follow? Predictably so:

IBM's Watson Supercomputer Beats Humans in Jeopardy Practice Match

eWeek.com

By: Fahmida Y. Rashid

2011-01-13

Watson, IBM's latest DeepQA supercomputer, defeated its two human challengers during a demonstration round of Jeopardy on Jan. 13. The supercomputer will face former Jeopardy champions Ken Jennings and Brad Rutter in a two-game, men-versus-machine tournament to be aired in February.

However, the Jeopardy match-up was not the "culmination" of four years of work by IBM Research scientists that worked on the Watson project, but rather, "just the beginning of a journey," Katharine Frase, vice president of industry solutions and emerging business at IBM Research, told eWEEK.

Supercomputers that can understand natural human language—complete with puns, plays on words and slang—to answer complex questions will have applications in areas such as health care, tech support and business analytics, David Ferrucci, the lead researcher and principal investigator on the Watson project, said at the media event showcasing Watson at IBM's Yorktown Heights Research Lab.

Watson analyzes "real language," or spoken language, as opposed to simple or keyword-based questions, to understand the question, and then looks at the millions of pieces of information it has stored to find a specific answer, said Ferrucci.

This is undoubtedly a remarkable accomplishment.

Indeed, accompanying the announcements we are also seeing predictions that such supercomputers "will have applications in health care."

Indexing of the medical literature and data mining (for better or worse) of free text come to mind.

However, the current irrational exuberance about healthcare IT in 2011 is based on several misconceptions. This leads to predictions such as this ...

The technology has to process natural language to understand "what did they mean" versus "what did they say," which has a lot of implications in the health care sector, said Frase. Patients are not using the terms doctors learned in medical school to describe their ailments, but more likely the terms they picked up from their parents growing up, she said.

"Patients are not using the terms doctors learned in medical school to describe their ailments"?

What medical school(s), exactly, are being spoken of here?

It seems as if IT folks think medicine was invented just yesterday. In fact, in medical school, internship, residency and practice we learn all about that, and we learn how to 'translate' that information, or use it to elicit more information as needed, in order to provide care. I'm not sure a multimillion-dollar supercomputer is needed for that ...

... and related "platform database" predictions such as this:

... A Watson-like system can take that information and co-relate it against all the medical journals and relevant [who decides that? - ed.] information, and say, "Here's what I think [think? -ed] and why," while showing its evidence for how it came up with the conclusion, according to Frase.

(Actually, computers don't think. A more correct statement would be "here are the results of the algorithms that your faithful machine has crunched, using the medical literature as input.")

That's quite naïve and idealistic with regard to actual medical decision making. It is a computer technician's oversimplified, reductionist, amateur view regarding biomedicine, a domain of often wicked complexity.

As I intimated above, one key issue is what is "relevant" with regard to information.

Consider that the medical literature suffers from numerous conflict-of-interest and dishonesty-related phenomena that make it increasingly untrustworthy, as pointed out by Roy Poses in a Dec. 2010 post, "The Lancet Emphasizes the Threats to the Academic Medical Mission," in my Aug. 2009 post, "Has Ghostwriting Infected The 'Experts' With Tainted Knowledge, Creating Vectors for Further Spread and Mutation of the Scientific Knowledge Base?", and elsewhere on this blog.

Then too, there are plausibility issues in medical research, as expressed in the paper "Why Most Published Research Findings Are False", John P. A. Ioannidis, PLoS Medicine 2(8): e124, 2005. Dr. Ioannidis observes:

There is increasing concern that most current published research findings are false. The probability that a research claim is true may depend on study power and bias, the number of other studies on the same question, and, importantly, the ratio of true to no relationships among the relationships probed in each scientific field. In this framework, a research finding is less likely to be true when the studies conducted in a field are smaller; when effect sizes are smaller; when there is a greater number and lesser preselection of tested relationships; where there is greater flexibility in designs, definitions, outcomes, and analytical modes; when there is greater financial and other interest and prejudice; and when more teams are involved in a scientific field in chase of statistical significance. Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias.
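For readers who want the arithmetic behind that abstract: the paper models the post-study probability that a claimed finding is true, its positive predictive value (PPV), in terms of the pre-study odds R that a probed relationship is real, the Type I error rate α, the Type II error rate β (power is 1 − β), and a bias term u. The short Python sketch below evaluates the paper's formula, PPV = ([1 − β]R + uβR) / (R + α − βR + u − uα + uβR), which reduces to (1 − β)R / (R − βR + α) when there is no bias. The function name `ppv` and the example inputs are my own, purely illustrative; this is a back-of-the-envelope sketch, not a reproduction of the paper's simulations.

```python
def ppv(R: float, power: float, alpha: float = 0.05, u: float = 0.0) -> float:
    """Post-study probability that a claimed research finding is true.

    Illustrative helper (hypothetical name) following the PPV formula in
    Ioannidis (2005), PLoS Medicine 2(8): e124.
        R     -- pre-study odds that a probed relationship is true
        power -- 1 - beta, probability of detecting a true relationship
        alpha -- Type I error rate
        u     -- proportion of analyses that would not have been "findings"
                 but are reported as such because of bias
    """
    beta = 1.0 - power
    # Claimed findings that reflect a real relationship.
    true_claims = (1.0 - beta) * R + u * beta * R
    # All claimed findings, true and false (common factor 1/(R+1) cancels).
    all_claims = R + alpha - beta * R + u - u * alpha + u * beta * R
    return true_claims / all_claims


# Well-powered study of a plausible hypothesis (1:1 odds), no bias.
print(round(ppv(R=1.0, power=0.8), 3))         # → 0.941
# Exploratory field: only 1 in 10 probed relationships is real.
print(round(ppv(R=0.1, power=0.8), 3))         # → 0.615
# Same field, modest bias (u = 0.2).
print(round(ppv(R=0.1, power=0.8, u=0.2), 3))  # → 0.259
# Underpowered study in that biased field.
print(round(ppv(R=0.1, power=0.2, u=0.2), 3))  # → 0.13
```

Under these assumed inputs, the same 80%-powered study produces a claim that is right about 94% of the time when the hypothesis is plausible, but only about one time in four once low pre-study odds and modest bias are combined. A machine that "co-relates" against that literature inherits those odds.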

Managing these very real-world issues in patient care requires nothing less than human judgment born of experience, critical thinking skills (emphasis on "thinking," which computers cannot do; sorry, all you HAL-9000 and M5 fans), and intuition. "Garbage in, garbage out" applies in the extreme.

(The Ultimate Computer, Dr. Richard Daystrom's mid-23rd-century Multitronic unit, M5. Fun to watch it beat up on starships Excalibur, Hood, Lexington and Potemkin due to malfunction. Even more fun to watch William Shatner talk it into cybernetic suicide via guilt. And yes, as a teen I knew all the ST trivia cold, but I think sci-fi has gone too far into people's heads.)

There are also other computer-vs-human mind issues at play.

First, let's look at Watson. Just to play the TV quiz show Jeopardy, a game largely about the knowledge and recall of trivia, it took the following:

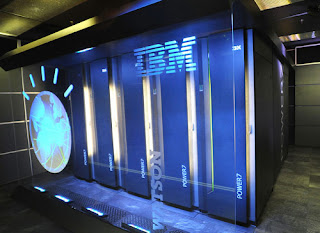

- Watson is a breakthrough human achievement in the scientific field of Question and Answering, also known as "QA." The Watson software is powered by an IBM POWER7 server optimized to handle the massive number of tasks that Watson must perform at rapid speeds to analyze complex language and deliver correct responses to Jeopardy! clues. The system incorporates a number of proprietary technologies for the specialized demands of processing an enormous number of concurrent tasks and data while analyzing information in real time.

- An entire team of "algorithm specialists" writing algorithms tailored to "the special question processing" needed to answer Jeopardy questions, such as: "On Sept. 1, 1715 Louis XIV died in this city, site of a fabulous palace he built", and "To marry Elizabeth, Prince Philip had to renounce claims to this southern European country's crown."

- Watson took about four years to build and is made up of 10 racks of [multiprocessor - ed.] IBM servers running Linux with 15 terabytes of memory [presumably fast-access RAM, not hard disk -ed.], the report said. [as per the LA Times - ed.] Over those four years, IBM has filled Watson with about 200 million pages' worth of information from encyclopedias, dictionaries, books, news and movie scripts, the AP said.

Second, it will be many years indeed before even the current Watson QA capabilities can be tailored to a domain as complex as biomedicine and made widespread for the hundreds of thousands of practicing physicians and the much larger number of allied healthcare professionals in the U.S. or worldwide.

Third, there's this from the same LA Times article linked above:

- Like its human competitors, Watson won't have Internet access [or access to anything outside its immense local storage - ed.] during the games, so Googling an answer won't be an option, the report said.

I see a significant degree of machine-human unfairness right there. A computer has 100% reliable access to the information in its storage media. The human mind does not. That's appropriate for a TV game show that tries to test a person's knowledge of trivia, but that's not how medicine works.

What if the match were made more fair, giving the humans error-free access to the same information Watson has stored in its own 15 terabytes and 200 million pages?

Fourth, medicine is not about recalling trivial factoids of information based on parsing natural language queries in the "puzzle format" of Jeopardy. As I wrote here and here, medicine is not a platform database information retrieval problem. (I would argue medicine, except in simple cases, is in large part a matter of filtering the irrelevant, unlikely, and unreliable, of which there is an exponentially increasing amount, from information relevant to the subtleties of a complex medical situation.)

Certainly, today's clinical IT will make little dent in healthcare quality and error reduction, since most of those problems do not stem from paper-versus-electronic record-keeping, a point I made in my Dec. 2010 post "Is Healthcare IT a Solution to the Wrong Problem?". The expectations for today's health IT are grossly exaggerated.

How about expert-system technology such as Watson? Whatever the NLP and fact-retrieval 'tours de force' of IBM Watson, medicine is about cognition: about human judgment born of experience in dealing with ambiguity, not just of language but of observations, findings, lab data, image interpretation, etc.; and about human intuition and the assembly and integration of a huge amount of disparate information in ways not well understood even by its practitioners. The end result is not just the recall of a piece of information.

I consider a statement such as:

... a Watson-like system can take that information and co-relate it against all the medical journals and relevant information, and say, "Here's what I think [actually, as mentioned previously, here are the results of the algorithms - ed.] and why"

... to ascribe as grandiose a value to the technology as did the statements I heard a decade ago about the health IT of the day (or even today) "revolutionizing medicine."

I'm not even sure such a capability would be very useful; we already have DXplain, developed by domain experts over decades, and it has not had a major impact on healthcare to date.

The real breakthrough will come when a cybernetic expert system can take, say, cases from the Case Records of the Massachusetts General Hospital in the NEJM verbatim and compete as a peer (i.e., without being recognized as a machine) with a round-table panel of expert physicians who have facile access to the medical literature (e.g., PubMed) on the differential diagnosis, how to establish the diagnosis and rule out others, the treatment strategies, and the likely outcomes, and then participate in the care.

When this happens (call this the "NEJM Turing Test"), and when such capabilities are affordable and widely available, then medicine will have been revolutionized.

Of course, the hardware and algorithmic advances required to make a truly "revolutionary" tool such as this are staggering. Considering that it took 10 racks of multiprocessor IBM servers with 15 terabytes of memory, and a team of varied domain experts writing algorithms for several years, to accomplish the NLP advances and lookups needed to answer Jeopardy-style trivia questions, one can only imagine what a truly useful cybernetic medical assistance system would look like.

It should also be remembered that Watson does not think. Humans do. Cybernetic Jeopardy and chess-playing accomplishments notwithstanding, I believe a machine even close to passing an "NEJM Turing test" will be a long time in coming. Until then, we should be encouraging better support for human physicians struggling to use their medical expertise in a sea of bureaucracy, stress and overwork (part of which will increasingly be a struggle with mission-hostile health IT).

Finally, far off as I believe it to be, I do think the hundreds of billions of dollars being devoted to today's health IT would be better spent developing a "Dr. Watson" that can pass a medical Turing test as described above than deploying mission-hostile, primitive HIT and building an unsustainable mega-bureaucracy to support it, as described in my post here.

This is not to minimize the wonderful accomplishments of the Watson team. I just wish predictions of cybernetic miracles in medicine would be held in abeyance in light of the lessons of the past fifty years of computers in medicine.

In the meantime, perhaps the current Watson might be useful in remediating our very sclerotic and moribund "mainstream media," especially in the domain of politics. Its reporters and writers can certainly use cybernetic help with their fact-checking and logic, Jeopardy-style, far more so than physicians.

-- SS

Feb. 23, 2011 addendum:

Gevalt!

From the article "IBM's Watson could usher in new era of medicine", Sharon Gaudin, Computerworld, February 17, 2011:

Jennifer Chu-Carroll, an IBM researcher on the Watson project, said the computer system is a perfect fit for the healthcare field ... "Think of some version of Watson being a physician's assistant," Chu-Carroll said. "In its spare time, Watson can read all the latest medical journals and get updated. Then it can go with the doctor into exam rooms and listen in as patients tell doctors about their symptoms. It can start coming up with hypotheses about what ails the patient."

Gevalt indeed ... incredible irrational exuberance. We can easily go from Jeopardy to Medicine ... and then to the Moon, in a hot air balloon! (The moon is up, hot air balloons go up, what's the problem?)

How many patients has Chu-Carroll seen lately?

(Her bio at http://researcher.ibm.com/researcher/view.php?person=us-jencc was down when I first checked: "The server at researcher.ibm.com is taking too long to respond." Watson must be taking a nap. Archive.org said, "The connection has timed out. The server at web.archive.org is taking too long to respond." Just like a doctor!)

Anyway, it now appears:

I am a Research Staff Member at IBM T. J. Watson Research Center. I also manage the Knowledge Structures group which focuses on improving advanced search technology through the use of natural language processing and machine learning techniques. Prior to joining IBM in 2001, I spent 5 years as a Member of Technical Staff at Lucent Technologies Bell Laboratories. My research interests include question answering, semantic search, natural language discourse processing, and spoken dialogue management.

Please, please, please, computer scientists: STOP MAKING THESE PREDICTIONS OF CYBERNETIC MIRACLES JUST AROUND THE CORNER. YOU'VE BEEN DOING IT SINCE THE VACUUM TUBE-BASED MACHINES.

STOP! PLEASE!

(Maybe first they could give us a computer that does a perfect, lucid, coherent, fluent translation of, say, Russian to English, another promise made since the 1950s "Robby the Robot" years?)

Robby the Robot in "Forbidden Planet." Lost in Space fans will remember Robby as doing battle with the Class M-3 Model B9, General Utility Non-Theorizing Environmental Control Robot ("Danger, Danger, Will Robinson") as well!

I think the essay in today's WSJ by UC Berkeley philosopher John Searle is also apropos: Watson Doesn't Know It Won on 'Jeopardy!'

Feb. 25, 2011 addendum: See my Wall Street Journal Letter to the Editor on these matters at this link.

-- SS